Building SG Law Cookies: Insights and Reflections

I share my experience and process of generating daily newsletters from Singapore Law Watch using ChatGPT, serverless functions and web technologies.

Introduction

It's easy to be impressed by large language models like #ChatGPT and GPT-4. They're really helpful and fun to use, and there are a ton of uses if you're creative. For weeks, I was mesmerised by the possibilities: this is the prompt I would use; with this and that, I could do something new.

When I got serious, I found a particular itch to scratch. I wanted to create a product: something made by AI that people could enjoy. It had to work, be produced quickly and have room to grow if it proved promising. It also had to be unique and not reproduce anything already provided by LawNet or others.🤔

There was one problem I felt I could solve easily. I've religiously followed Singapore Law Watch for most of my working life. It's generally useful as a daily update on Singapore's most relevant legal news. There's a lot of material to read daily, so you have to scan the headlines and descriptions. On busier days, I skipped even more.

So… what can ChatGPT do? It can read the news articles for me, summarise them, and then make a summary report out of all of them. This is different from scanning headlines because, primarily, the AI has read the whole article. Hopefully, it can provide better value than a list of articles.

The Development Process

The code repository is available here.

The Easy Part — Prompts

Notwithstanding my excitement about ChatGPT and large language models, I spent the least time on the prompts. Maybe that's because I had already had a lot of practice developing with ChatGPT.

Anyhow, I found the prompts fairly satisfactory. The system prompt was:

> You are a helpful assistant that summarises news articles and opinions for lawyers who are in a rush. The summary should focus on the legal aspects of the article and be accessible and easygoing. Summaries should be accurate and engaging.

After asking it to summarise each article featured as a headline on the Singapore Law Watch website:

> Now, use all the news articles provided previously to create an introduction for today's blog post in the form of a vivid poem which has not more than 6 lines.
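The second prompt only works if the model can still "see" every article, which suggests one running conversation. The sketch below shows one way to lay that history out in the OpenAI-style chat format of `{"role": ..., "content": ...}` dictionaries. It is an illustration under assumptions, not the project's code: `build_poem_conversation` is a hypothetical helper, and passing the raw article text as the user message is a simplification of the templated prompt used in the real script.

```python
# Sketch: one conversation holding every article and its summary, ending with
# the poem request. Helper name and message layout are assumptions.

SYSTEM_PROMPT = (
    "You are a helpful assistant that summarises news articles and opinions "
    "for lawyers who are in a rush. The summary should focus on the legal "
    "aspects of the article and be accessible and easygoing. Summaries "
    "should be accurate and engaging."
)

POEM_PROMPT = (
    "Now, use all the news articles provided previously to create an "
    "introduction for today's blog post in the form of a vivid poem which "
    "has not more than 6 lines."
)


def build_poem_conversation(articles, summaries):
    """Interleave each article with the summary the model gave for it,
    then append the final request for the poem.

    `articles` and `summaries` are parallel lists of strings.
    """
    if len(articles) != len(summaries):
        raise ValueError("every article needs exactly one summary")
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for article, summary in zip(articles, summaries):
        messages.append({"role": "user", "content": article})
        messages.append({"role": "assistant", "content": summary})
    # The poem request comes last, after every article it has to draw on.
    messages.append({"role": "user", "content": POEM_PROMPT})
    return messages
```

The trade-off of a single conversation is that the history grows with every article, so a busy news day costs more tokens for the final poem request.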

The only problem I encountered was keeping the summaries short. I asked ChatGPT to limit itself to 80 words or 5 sentences, but it kept busting these limits no matter how many times I regenerated the responses. Then I found out that ChatGPT can't reliably count words.

There might be a more ChatGPT-ish way to beat this limitation, but I couldn't be bothered: I was using #Python and the API, so I could simply check the length of each summary in code.

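```python
import requests
from langchain.prompts.chat import HumanMessagePromptTemplate

# `articles`, `chat` (the chat model), `article_summary_prompt`,
# `article_content` and `summaries` are defined elsewhere in the script.
for article in articles:
    r = requests.get(article.source_link)
    # ...
    messages = article_summary_prompt.format_prompt(article=article_content).to_messages()
    result = chat(messages)
    # Keep asking for a more concise version until it is under 450 characters.
    while len(result.content) > 450:
        messages.append(result)
        shorter_template = "Now make it more concise."
        shorter_message_prompt = HumanMessagePromptTemplate.from_template(shorter_template)
        messages = messages + shorter_message_prompt.format_messages()
        result = chat(messages)
    summaries.append((result.content, article.source_link))
```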

Basically, I kept telling ChatGPT to make the summary more concise until it came in under 450 characters.
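One thing the loop above leaves open is what happens if ChatGPT never gets under the limit: it would keep calling the API. Here is a minimal sketch, not the project's actual code, of the same idea with an assumed cap on the number of rounds. `shorten_until_fits`, `ask_for_shorter` and `MAX_ROUNDS` are hypothetical names, and the model is replaced by a plain function so the sketch runs offline.

```python
from typing import Callable, List

MAX_CHARS = 450  # the same character budget as the loop above
MAX_ROUNDS = 5   # an assumed safety cap, not part of the original code


def shorten_until_fits(
    first_draft: str,
    ask_for_shorter: Callable[[List[str]], str],
    max_chars: int = MAX_CHARS,
    max_rounds: int = MAX_ROUNDS,
) -> str:
    """Return the first draft that fits within max_chars, or the last draft
    produced once max_rounds follow-up requests have been made."""
    drafts = [first_draft]
    rounds = 0
    while len(drafts[-1]) > max_chars and rounds < max_rounds:
        # Pass all drafts so far, mirroring how the chat history grows.
        drafts.append(ask_for_shorter(drafts))
        rounds += 1
    return drafts[-1]


if __name__ == "__main__":
    # A fake "model" that trims 100 characters off the latest draft each time.
    def fake_model(history: List[str]) -> str:
        return history[-1][:-100]

    summary = shorten_until_fits("x" * 700, fake_model)
    print(len(summary))  # 700 -> 600 -> 500 -> 400, so this prints 400
```

With the cap in place, a stubborn model costs at most five extra calls per article, and the longer-than-ideal summary is still published rather than blocking the day's run.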

Going #Serverless At Last

I have always been interested in serverless but haven't found a use for it. The idea of not dealing with infrastructure and using cloud computers only on demand was enticing. However, its limits in terms of memory and execution time made it difficult for me to shift from writing scripts to microservices.

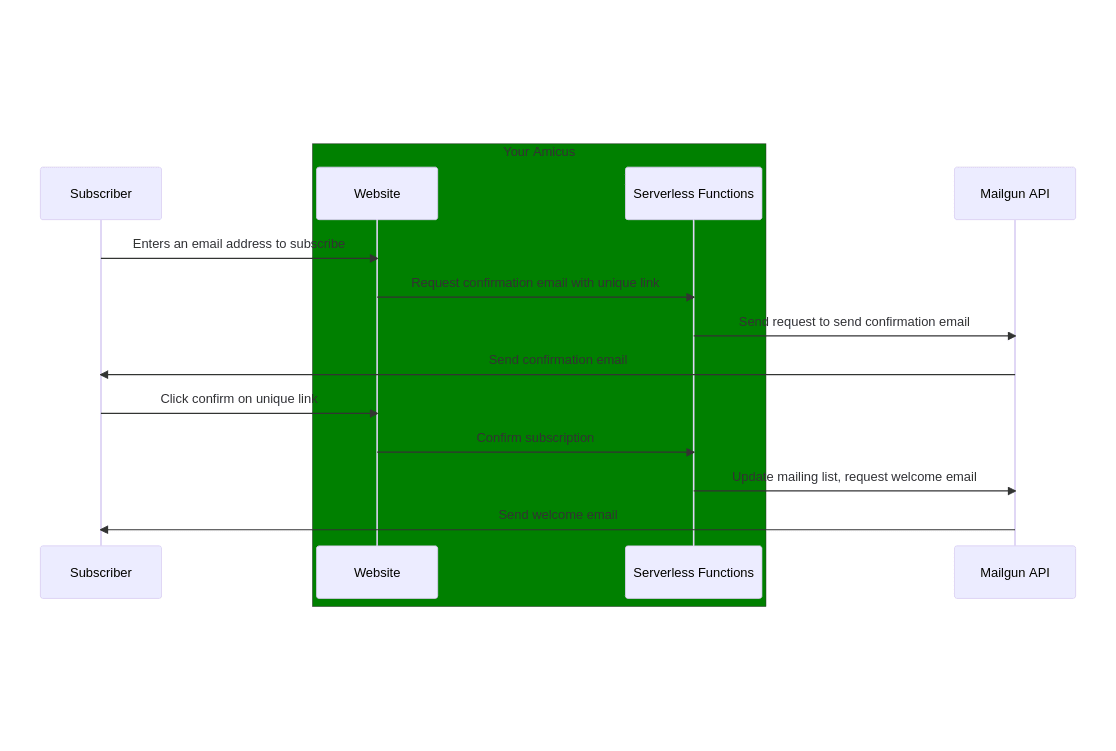

I knew from the start that this service would be available in various formats. A website (more on that in a while) and an RSS feed were rather straightforward.

The more challenging format was the email newsletter. The challenge was not the content: everything was pushed through #Jinja templates, which weren't new to me thanks to my experience with #docassemble.

The challenge was the workflow.

I found serverless functions to be great for calling other APIs with some business logic. The Mailgun API essentially sends emails, so the program just needs to tell it what to send. As the function only sends requests to another server, its resource requirements were light.
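For illustration, here is a minimal sketch of what such a function might boil down to, using only the standard library. It assumes Mailgun's messages endpoint (`POST /v3/<domain>/messages` with HTTP Basic auth and the username `api`) on the US API host; the function names, domain, addresses and key are placeholders, and the `to` address could be, for example, a Mailgun mailing-list address. Rendering the HTML with Jinja is left out.

```python
import base64
import urllib.parse
import urllib.request

# Assumption: US region host; accounts in other regions use a different host.
MAILGUN_API_BASE = "https://api.mailgun.net/v3"


def build_mailgun_request(domain, api_key, sender, recipient, subject, html):
    """Build (but do not send) a POST request to Mailgun's messages endpoint."""
    url = f"{MAILGUN_API_BASE}/{domain}/messages"
    body = urllib.parse.urlencode(
        {"from": sender, "to": recipient, "subject": subject, "html": html}
    ).encode("utf-8")
    # HTTP Basic auth with the literal username "api" and the API key as password.
    token = base64.b64encode(f"api:{api_key}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


def send_newsletter(request):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

Splitting "build" from "send" keeps the request easy to inspect in tests without touching the network, and the whole thing needs nothing heavier than the standard library, which suits a serverless function.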

Given the still very generous free limits for serverless functions, I wouldn't need to pay for these functions in the foreseeable future. I also get to keep my zippy and free static website.

Now this is Continuous Deployment

I did not have as much luck with automatically updating the website. Including the #openai package in the serverless function apparently busted its size limit. I guess I could figure out how to “editorialise” my package requirements further to make them fit, but I've got better things to do with my life.

Luckily for me, #GitHub Actions is free for public repositories, with generous usage limits and without the tight space and memory restrictions of serverless functions.

So, I set up a GitHub Action to run my main Python script every weekday. The script generates a new page for the Hugo website, which the Action then commits and pushes to the main repository. This triggers an automatic build of the static site, sending it out to the world wide web. The website is thus fully automated… until I decide to break it.
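The page-generation step might look something like the sketch below. It is an assumption-laden illustration, not the repository's code: the `content/posts/` location, YAML front matter, date-based filename and the `write_hugo_page` helper are all hypothetical. Committing and pushing the file would be separate steps in the workflow.

```python
import datetime as dt
import json
from pathlib import Path


def write_hugo_page(site_root: str, day: dt.date, title: str, summaries) -> Path:
    """Write one Markdown page for `day` and return its path.

    `summaries` is a list of (summary_text, source_link) tuples, the same
    shape as the `summaries` list built in the summarising loop.
    """
    posts_dir = Path(site_root) / "content" / "posts"
    posts_dir.mkdir(parents=True, exist_ok=True)
    # The date doubles as the filename, so a rerun on the same day overwrites it.
    path = posts_dir / f"{day.isoformat()}.md"
    lines = [
        "---",
        f"title: {json.dumps(title)}",  # JSON quoting is also valid YAML
        f"date: {day.isoformat()}",
        "---",
        "",
    ]
    for text, link in summaries:
        lines.append(f"{text} [Source]({link})")
        lines.append("")
    path.write_text("\n".join(lines), encoding="utf-8")
    return path
```

Because the output is just a Markdown file, Hugo's normal build picks it up, and the site's RSS feed comes along without extra code.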

GitHub Actions are, unfortunately, much harder to debug and test. Composing your workflow from public actions definitely reduces errors (as long as you know how to use them), but I had to wait for each run to finish before I knew whether it was working. It also made my Git log a public graveyard of past errors.

Update: it's possible to test-run your actions locally, and more effectively, using act.

Results

At the end of this sprint, I had developed a program that generates daily newsletters summarising the news headlines from the Singapore Law Watch website using ChatGPT. It can be accessed in three ways:

- A website

- An RSS feed

- An email newsletter subscription (the signup box is at the foot of each page)

The process is fully automated, so I am producing content in my sleep.

The power of large language models also allows content to be repurposed in different ways. For example, I grew sick of reading the main summary of the day's news, which sometimes felt like a regurgitation of another summary, so I decided to experiment with a poem instead. Here's one of those poems:

Singapore's legal updates we bring,

News of drug rampages and murder's sting,

Money mules beware, laws may soon swing,

SINGPASS shares could lead to imprisonment's cling,

Stay tuned for justice's next fling,

As our summaries continue to sing.

I believe it would have been impossible to get anyone to summarise the news in a rhyming stanza every day. I think it is a big improvement: it helps a reader quickly decide whether to read further.

As mentioned, the hosting costs are within free limits, so the only costs I am racking up currently are from using the OpenAI API, which amount to about $0.02 a day. That's all right for a hobby project.

I guess in the foreseeable future, I am going to keep this project running on autopilot. Do use it and let me know what you think!

The Way Forward

There are obvious ways to expand this project:

- More ways to deliver: I am definitely interested in a Mastodon bot or a Telegram/Discord bot.

- More sources to include: Right now, it’s based only on the headlines from the Singapore Law Watch. There are a lot more sources of legal news, including newspapers, government websites and other feeds.

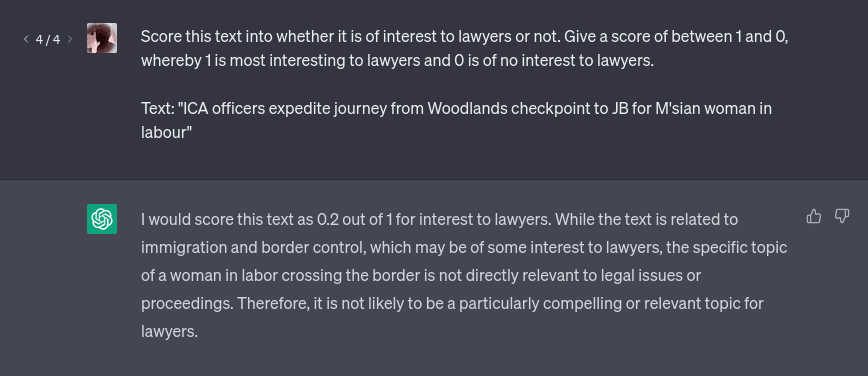

However, personalising the news and updates is definitely the ultimate goal. Richard Susskind’s “The End of Lawyers?” mentions “personalised alerting” on page 67 as a current trend in technology. The idea is that every lawyer, whether in Singapore or not, and no matter what area of law he or she is involved in or interested in, gets his or her own personal newspaper.

I am not sure whether every lawyer in Singapore truly wants his or her own personal legal newspaper. However, I am sure that the possibility is within reach. One oft-overlooked achievement of large language models is that they can also perform traditional natural language processing tasks such as classification. Witness this beautiful response from ChatGPT:

Now that's what I call an AI assistant.

Unfortunately, large language models can’t solve everything. The bigger problem is in the backend. How would information be stored and used to produce a personalised newsletter? In the end, the most critical question in artificial intelligence, despite the gazillions of bytes already used to train such models, is still data.
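To make the backend question concrete, here is a toy sketch of one possible data model: store each subscriber's practice areas, label each article once, then filter per subscriber. Everything here is hypothetical: the `Subscriber` type, the category list and `keyword_classifier`, which stands in for a call to a language model so the sketch runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# Hypothetical categories; a real list would come from the newsletter's needs.
PRACTICE_AREAS = ["criminal", "corporate", "family", "technology", "other"]


@dataclass
class Subscriber:
    email: str
    interests: Set[str] = field(default_factory=set)


def keyword_classifier(text: str) -> str:
    """A toy stand-in for an LLM classification prompt."""
    lowered = text.lower()
    if "murder" in lowered or "drug" in lowered:
        return "criminal"
    if "singpass" in lowered or "data" in lowered:
        return "technology"
    return "other"


def personalised_digests(
    articles: List[str],
    subscribers: List[Subscriber],
    classify: Callable[[str], str] = keyword_classifier,
) -> Dict[str, List[str]]:
    """Map each subscriber's email to the articles matching their interests."""
    labels = []
    for article in articles:
        label = classify(article)
        # A model may answer outside the allowed list; fold such answers into "other".
        labels.append(label if label in PRACTICE_AREAS else "other")
    return {
        sub.email: [a for a, label in zip(articles, labels) if label in sub.interests]
        for sub in subscribers
    }
```

Classifying each article once and reusing the label keeps model calls proportional to the number of articles rather than the number of readers; the harder part, as noted above, is collecting and storing good data about what each reader wants.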

I hope to develop an API as the next big update of this project. Hopefully, with my added experience in serverless functions and cloud deployment, I won't fall short this time.

Conclusion

I had a fun few weeks developing this project, and I don’t regret letting this blog go quiet.

Do try SG Law Cookies, and let me know if you have any feedback! Let’s keep building!

Love.Law.Robots. – A blog by Ang Hou Fu
