Comparing approaches to regulating Generative AI

I compare two discussion papers on possible approaches to regulating the use of generative #AI.

Given the coming AI Apocalypse, you would expect tech companies to buckle down and make their products safe at all costs. Well, you know they are completely focused on averting the Cthulhu apocalypse... when they are on a world tour.

Since we are not going to get much of a hand from companies like #OpenAI, it's up to users to figure out how to do AI safety by themselves. I've been fortunate to come across two new approaches to share with my readers.

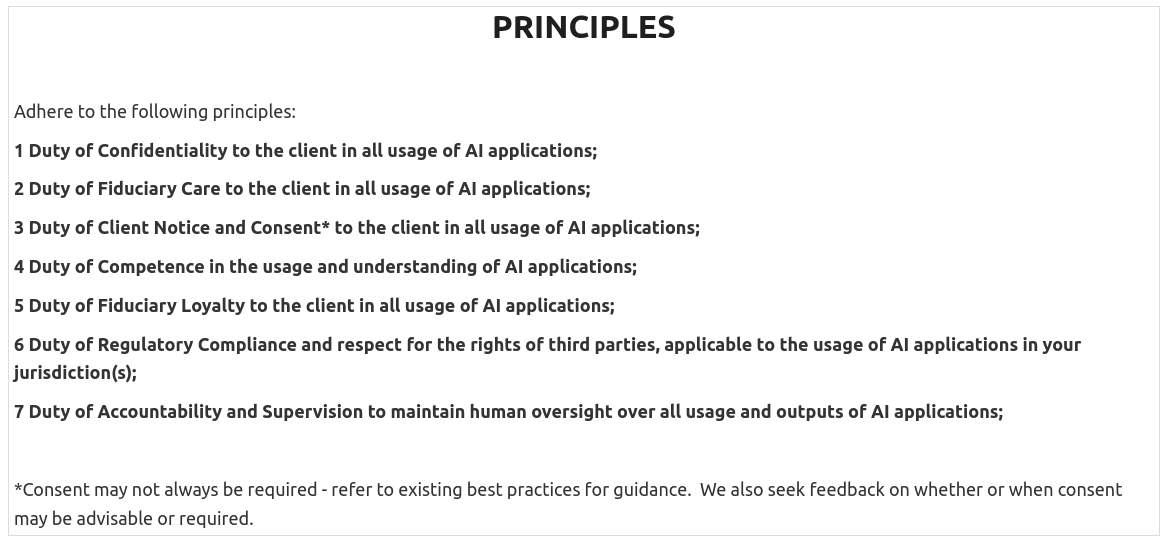

1. MIT Computational Law's “Task Force on Responsible Use of Generative AI for Law”

It's amazing how one well-publicised screw-up can lead to action. The hapless lawyers in this sorry exercise of using ChatGPT instead of research blame the attention on “schadenfreude”, but we will have our fun while the party lasts.

The task force's guidelines are still an early draft, but their simplicity is breathtaking.

Since lawyers often regard themselves as fiduciaries and officers of the court, the duties the guidelines set out are easy for this audience to understand. They are probably a great starting point for an AI policy at law firms, and even at corporate legal departments. They are also worth considering for conservative organisations that want to communicate stringent standards to their employees.

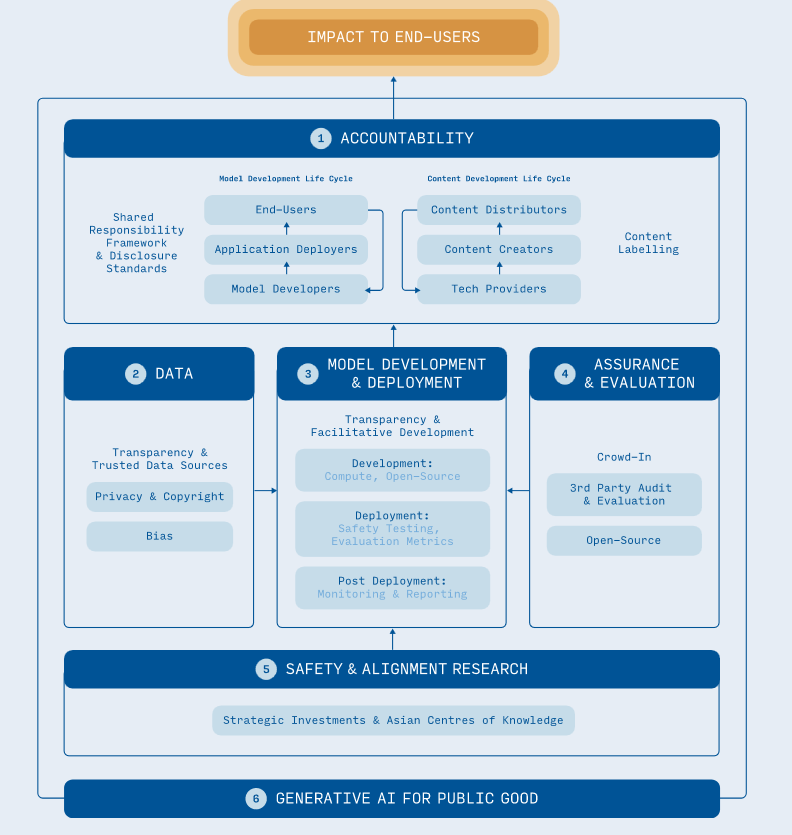

2. Singapore IMDA and Aicadium's Discussion Paper on Singapore's approach to building an ecosystem for trusted and responsible adoption of Generative AI

Last week in Singapore, the “AI Verify Foundation” was launched to “harness the collective power and contributions of the global open source community to develop AI testing tools for the responsible use of AI”. I was a bit confused by the reference to the global “open source community” since the foundation's membership was a veritable list of commercial powerhouses. (I would note for fairness that Red Hat is probably as close as one can get to an “open source” company.)

The government authority, IMDA, worked with a Temasek-linked company to pen a discussion paper.

It's not as easy to process as the previous document, so I will try to highlight what I found interesting for the topic of this post.

- Clearer accountability through a “shared responsibility framework” familiar in cloud deployments (read: AI companies are responsible for their models, but developers and users are also responsible for how they use such resources/models)

- Enhance transparency via a set of information disclosure standards (à la nutrition labels)

- Platforms and producers should label AI-generated content

- Policymakers should issue initial data privacy and copyright guidelines for generative AI

- Developers need to be transparent, in an objective and consistent way, about how their models are developed and deployed.

- Careful deliberation and a calibrated approach towards regulation should be taken while investing in capabilities and the development of governance standards and tools.

- Public-private partnerships will be a key avenue to accelerate work in this area.

Comparing the approaches

I think an apples-to-apples comparison is difficult, mainly because the two papers differ in scope – the MIT task force focuses on lawyers as users, while the IMDA paper tries to outline an approach to regulating an industry.

But really, what is the nub of the question? I think it depends on how much responsibility we expect from the end user.

I personally prefer the MIT task force's version because it spells out the risks of generative AI and how each should ideally be dealt with.

However, it requires a well-informed and sophisticated end user. Many, unfortunately, don't know they don't qualify. If we followed the guidelines strictly, most uses would be significantly delayed or curtailed.

The IMDA's approach of shared responsibilities, transparent accountability and partnerships is more permissive, and I think it's the industry's favoured regime. I also find it remarkable how closely it resembles regulatory models already in use.

The obvious criticism is that this model has been shown not to work. Notwithstanding the love shown all around, there will invariably be conflicts of interest. The Singapore government doesn't hesitate to issue legal notices regarding Internet content regulation.

This is a developing area which depends on the actions of many players. It remains to be seen what we will end up with.

It also means it will depend on how all of us use this new technology, including, most definitely, the people who shoot themselves in the foot with it.

Love.Law.Robots. – A blog by Ang Hou Fu
