Oscillator Drum Jams: The Asset Pipeline

This is the second post in a series about my new app Oscillator Drum Jams. Start here in Part 1.

You can download Oscillator Drum Jams at oscillatordrums.com.

To start making this app, I couldn’t just fire up Xcode and get to work. The raw materials were (1) a PDF ebook, and (2) a Dropbox folder full of single-instrument AIFF tracks exported from Jake’s Ableton sessions. Neither of those things could ship in the app as-is; I needed compressed audio tracks, icons for each track representing the instrument, and the single phrase of sheet music for every individual exercise.

Processing the audio

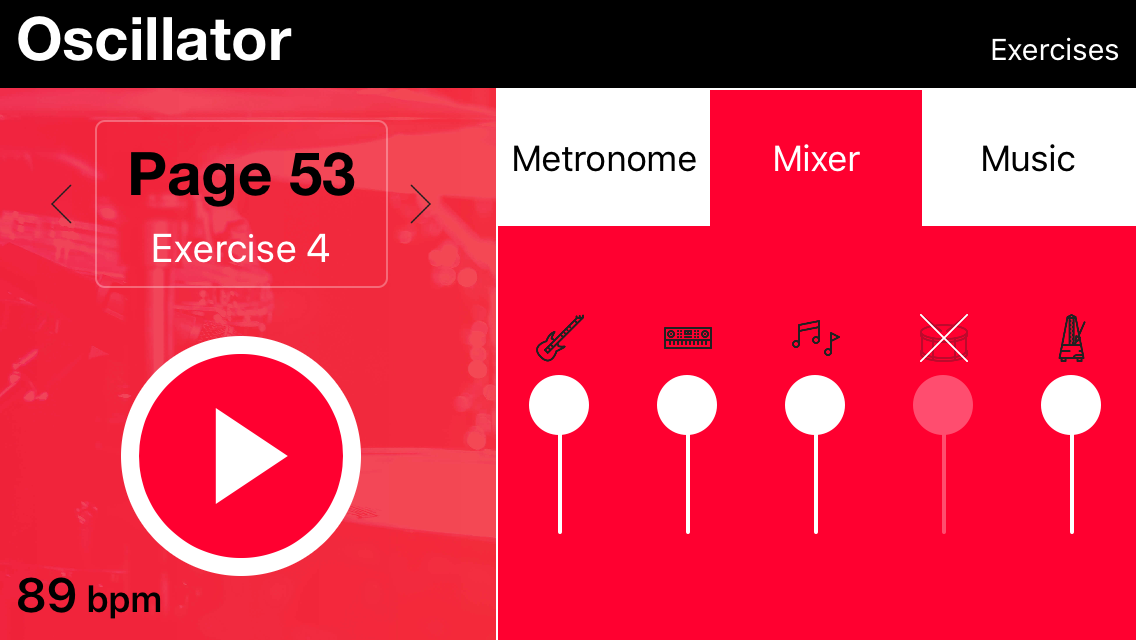

Each music loop has multiple instruments plus a drum reference that follows the sheet music. We wanted to isolate them so people could turn them on and off at will, so each exercise has 3-6 audio files meant to be played simultaneously.

Jake made the loops in Ableton, a live performance and recording tool, and its data isn’t something you can just play back on any computer, much less an iPhone. So Jake had to export all the exercises by hand in Ableton’s interface.

We had to work out a system that would minimize his time spent clicking buttons in Ableton’s export interface, and minimize my time massaging the output for use in the app. Without a workflow that minimizes human typing, it’s too easy to introduce mistakes.

The system we settled on looked like this:

    p12/
        #7/
            GUITAR.aif
            BASS.aif
            Drum Guide.aif
            Metronome.aif
        #9/
            BASS.aif
            Drum Guide.aif
            GUITAR.aif
            Metronome.aif
    p36 50BPM triplet click/
        #16/
            Metronome.aif
            GUITAR.aif
            BASS.aif
            RHODES.aif
            MISC.aif
            Drum Guide.aif

The outermost folder contains the page number. Each folder inside a page folder contains audio loops for a single exercise. The page or the exercise folder name may contain a tempo (“50BPM”) and/or a time signature note (“triplet click”, “7/8”). This notation is pretty ad hoc, but we only needed to handle a few cases. We changed the notation a couple of times, so the folders also use a couple of older conventions that work the same way with slight differences.

I wrote a simple Python script to walk the directory, read all that messy human-entered data using regular expressions, and output a JSON file with a well-defined schema for the app to read. I wanted to keep the iOS code simple, so all the technical debt related to multiple naming schemes lives in that Python script.
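A minimal sketch of what a script like that might look like, using the folder layout shown earlier. The regular expressions, the function names, and the JSON keys are all hypothetical; the real script handled more naming conventions than this.

```python
import json
import re
from pathlib import Path

# Hypothetical patterns for the naming scheme; assumptions, not the real ones.
PAGE_RE = re.compile(r"^p(\d+)")
EXERCISE_RE = re.compile(r"^#(\d+)")
TEMPO_RE = re.compile(r"(\d+)\s*BPM", re.IGNORECASE)


def parse_folder_name(name):
    """Pull the optional tempo and click note out of a folder name."""
    tempo = TEMPO_RE.search(name)
    return {
        "bpm": int(tempo.group(1)) if tempo else None,
        "triplet_click": "triplet click" in name.lower(),
    }


def build_manifest(root):
    """Walk root/<page>/<exercise>/ and return a JSON-serializable list."""
    exercises = []
    for page_dir in Path(root).iterdir():
        page_match = PAGE_RE.match(page_dir.name)
        if not page_dir.is_dir() or not page_match:
            continue
        page_info = parse_folder_name(page_dir.name)
        for ex_dir in page_dir.iterdir():
            ex_match = EXERCISE_RE.match(ex_dir.name)
            if not ex_dir.is_dir() or not ex_match:
                continue
            ex_info = parse_folder_name(ex_dir.name)
            exercises.append({
                "page": int(page_match.group(1)),
                "exercise": int(ex_match.group(1)),
                # A note on the exercise folder wins over one on the page folder.
                "bpm": ex_info["bpm"] or page_info["bpm"],
                "triplet_click": ex_info["triplet_click"] or page_info["triplet_click"],
                "tracks": sorted(p.name for p in ex_dir.iterdir() if p.suffix == ".aif"),
            })
    # Sort numerically so page 9 comes before page 12.
    exercises.sort(key=lambda e: (e["page"], e["exercise"]))
    return exercises
```

Calling `json.dumps(build_manifest("path/to/export"), indent=2)` would then produce the file for the app to read.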

The audio needed another step: conversion to a smaller format. AIFF, FLAC, or WAV files are “lossless,” meaning they contain 100% of the original data, but none of those formats can be made small enough to ship in an app. I’m talking gigabytes instead of megabytes. I needed to convert them to a “lossy” format, one that discards a little bit of fidelity but is much, much smaller.

I first tried converting them to MP3. This got the app down to about 200 MB, but suddenly the beautiful seamless audio tracks stuttered at every loop point. When I looked into the problem, I learned that MP3 files often contain padding at the start and end because of how the compression algorithm works, which makes seamless looping very complex. MP3 was off the table.

Fortunately, there are many other lossy audio formats supported on iOS, and M4A/MPEG-4 has perfect looping behavior.

Finally, because Jake’s Ableton session sometimes contains unused instruments, I needed to delete files that contained only silence. This saved Jake a lot of time toggling things on and off during the export process. I asked FFmpeg to find all periods of silence in a file, and if a file had exactly one period of silence exactly as long as the track, I could safely delete the file.

Here’s how you find the silences in a file using FFmpeg:

    ffmpeg \
        -i <PATH> \
        -nostdin \
        -loglevel 32 \
        -af silencedetect=noise=-90.0dB:d=0.25 \
        -f null \
        -
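FFmpeg writes what it finds to stderr, so the “exactly one silence as long as the track” check comes down to parsing that log. Here is a rough sketch of how it could be done in Python. The function names and the small `tolerance` for rounding differences are my assumptions, and the regular expressions rely on the `Duration:` and `silence_duration:` lines that FFmpeg prints at this log level.

```python
import re
import subprocess

SILENCE_RE = re.compile(r"silence_duration:\s*([0-9.]+)")
DURATION_RE = re.compile(r"Duration:\s*(\d+):(\d+):([0-9.]+)")


def is_silent(log, tolerance=0.05):
    """True if the log reports exactly one silence spanning the whole track."""
    silences = [float(s) for s in SILENCE_RE.findall(log)]
    duration = DURATION_RE.search(log)
    if len(silences) != 1 or duration is None:
        return False
    hours, minutes, seconds = duration.groups()
    total = int(hours) * 3600 + int(minutes) * 60 + float(seconds)
    # Assumption: allow a little slack, since the two numbers are rounded differently.
    return abs(silences[0] - total) <= tolerance


def detect_silence_log(path):
    """Run the silencedetect command on one file and return FFmpeg's stderr."""
    result = subprocess.run(
        ["ffmpeg", "-i", str(path), "-nostdin", "-loglevel", "32",
         "-af", "silencedetect=noise=-90.0dB:d=0.25", "-f", "null", "-"],
        capture_output=True, text=True, check=True,
    )
    return result.stderr
```

With those two pieces, `is_silent(detect_silence_log(path))` answers the question for a single file.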

Here’s how the audio pipeline ended up working once I had worked out all the kinks:

1. Loop over all the lossless AIFF audio files in the source folder.

2. Figure out if a file is silent. Skip it if it is.

3. Convert the AIFF file to M4A and put it in the destination folder under the same path.

4. Look at all the file names in the destination folder and output a JSON file listing the details for all pages and exercises.
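Steps 1 through 3 could be sketched roughly like this. I am assuming FFmpeg with its built-in AAC encoder does the conversion (the `128k` bitrate is only a placeholder), and `is_silent_file` stands in for whatever silence check you use; every name here is hypothetical.

```python
import subprocess
from pathlib import Path


def destination_for(source, source_root, dest_root):
    """Mirror the source's relative path under dest_root with an .m4a suffix."""
    relative = Path(source).relative_to(source_root)
    return Path(dest_root) / relative.with_suffix(".m4a")


def conversion_command(source, dest, bitrate="128k"):
    """Build an FFmpeg command that encodes source as AAC in an M4A file."""
    return ["ffmpeg", "-nostdin", "-y", "-i", str(source),
            "-c:a", "aac", "-b:a", bitrate, str(dest)]


def convert_all(source_root, dest_root, is_silent_file):
    """Loop over the AIFF files, skip silent ones, and convert the rest."""
    converted = []
    for source in sorted(Path(source_root).rglob("*.aif")):
        if is_silent_file(source):
            continue
        dest = destination_for(source, source_root, dest_root)
        dest.parent.mkdir(parents=True, exist_ok=True)
        subprocess.run(conversion_command(source, dest), check=True)
        converted.append(dest)
    return converted
```

Keeping the path mapping and the command in small functions means they can be checked without running FFmpeg at all.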

Creating the images

The exercise images were part of typeset sheet music like this:

There were enough edge cases that I never considered automating the identification of exercises in a page, but I also never considered doing it by hand in an image editor either. No, I am a programmer, and I would rather spend 4 hours writing a program to solve the problem than spend 4 hours solving the problem by hand!

I started by using ImageMagick to convert the PDF into PNGs. Then I wrote a single-HTML-file “web app” that could use JavaScript to display each page of sheet music, with a red rectangle following my mouse. The JavaScript code assigned keys 1-9 to different rectangle shapes, so pressing a key would change the size of the rectangle. When I clicked, the rectangle would “stick” and I could add another one. The points were all stored as fractions of the width and height of the page, in case I decided to change the PPI (pixels per inch) of the PNG export. I’m glad I made that choice because I tweaked the PPI two or three times before shipping.

Here’s what that looked like to use:

The positions of all the rectangles on each page were stored in Safari’s local storage as JSON, and when I finished, I simply copied the value from Safari’s developer tools and pasted it into a text file.

Now that I had a JSON file containing the positions of every exercise on every page, I could write another Python script using Pillow to crop all the individual exercise images out of each page PNG.
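Because the rectangles are stored as fractions of the page, the crop box has to be scaled to whatever size the page PNG happens to be. A small sketch of that step follows; the `x`, `y`, `width`, and `height` keys are an assumed layout for the JSON, not necessarily the real one.

```python
def pixel_box(rect, page_width, page_height):
    """Convert a fractional rect to a (left, upper, right, lower) pixel box."""
    left = round(rect["x"] * page_width)
    upper = round(rect["y"] * page_height)
    right = round((rect["x"] + rect["width"]) * page_width)
    lower = round((rect["y"] + rect["height"]) * page_height)
    return (left, upper, right, lower)


def crop_exercise(page_png, rect, out_png):
    """Crop one exercise out of a page image and save it."""
    # Pillow is imported here so pixel_box stays free of dependencies.
    from PIL import Image

    with Image.open(page_png) as page:
        width, height = page.size
        page.crop(pixel_box(rect, width, height)).save(out_png)
```

The same rectangle gives a correctly scaled box at any export resolution, which is what makes changing the PPI painless.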

But that wasn’t enough. The trouble with hand-crafted data is you get hand-crafted inconsistencies! Each exercise image had a slightly different amount of whitespace on each side. So I added some code to my image trimming script that would detect how much whitespace was around each exercise image, remove it, and then add back exactly 20 pixels of whitespace on each side.
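The trim-and-pad logic is simple enough to show on a plain grid of grayscale values, which keeps this sketch runnable without an imaging library. The function names and the `threshold` for what counts as whitespace are assumptions. With Pillow, the same idea maps onto `Image.getbbox()` to find the content and `ImageOps.expand()` to add the border.

```python
def content_bounds(rows, threshold=250):
    """Return (left, upper, right, lower) around pixels darker than threshold.

    rows is a list of equal-length lists of grayscale values
    (0 = black, 255 = white). Returns None if everything is whitespace.
    """
    dark_rows = [y for y, row in enumerate(rows) if any(v < threshold for v in row)]
    if not dark_rows:
        return None
    dark_cols = [x for x in range(len(rows[0]))
                 if any(row[x] < threshold for row in rows)]
    return (dark_cols[0], dark_rows[0], dark_cols[-1] + 1, dark_rows[-1] + 1)


def trim_and_pad(rows, padding=20, white=255):
    """Crop to the content bounds, then add `padding` white pixels per side."""
    bounds = content_bounds(rows)
    if bounds is None:
        return rows
    left, upper, right, lower = bounds
    width = (right - left) + 2 * padding
    side = [white] * padding
    body = [side + row[left:right] + side for row in rows[upper:lower]]
    top = [[white] * width for _ in range(padding)]
    bottom = [[white] * width for _ in range(padding)]
    return top + body + bottom
```

Whatever margins the hand-drawn rectangle started with, every image comes out with the same border.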

I still wish I had found a way to remove the number in the upper left corner, but at the end of the day I had to ship.

Diagrams of the asset pipeline