Christmas won't be here for a while

Houfu has dreamt of having his own #MachineLearning tool for years, but he will have to wait longer.

Back in the pre-#ChatGPT days, I had long wanted to make my own machine-learning model but never got around to it because I lacked data. Here, “lack of data” didn't mean I was stuck in a desert. There was lots of data lying around; it just wasn't usable because it was trapped in scanned PDFs.

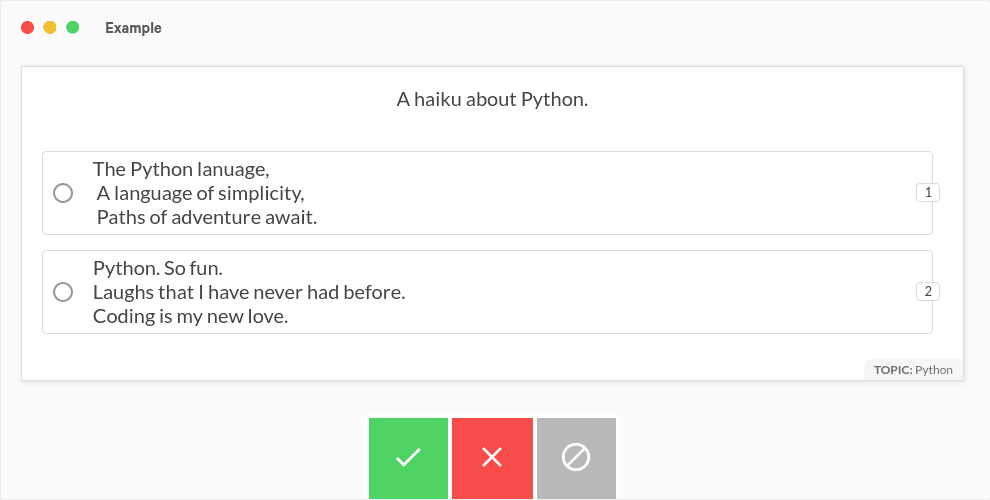

Another aspect of this problem was that the data had to be adequately annotated before the machine could detect information like named entities or classify them. For that #annotation work, I have long dreamt of using #Prodigy, from the makers of #spaCy. I still have the four-button sticker which you use to confirm your annotation!

In another quaint example of how ChatGPT has upended this field, annotation is now less important since large language models are pretty good at classification, sentiment analysis or detecting entities in a few-shot or zero-shot scenario.
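To make the zero-shot versus few-shot distinction concrete, here is a minimal sketch of how such a classification prompt might be assembled. The function name, the prompt wording and the sample labels are all hypothetical, and no model is called; the only difference between the two scenarios is whether labelled examples are included.

```python
def build_classification_prompt(text, labels, examples=()):
    """Build a classification prompt (hypothetical wording).

    With no examples this is a zero-shot prompt; with a handful of
    (text, label) pairs it becomes a few-shot prompt.
    """
    lines = ["Classify the text into one of these labels: " + ", ".join(labels) + ".", ""]
    for example_text, example_label in examples:
        lines += [f"Text: {example_text}", f"Label: {example_label}", ""]
    # The final, unlabelled item is the one the model is asked to complete.
    lines += [f"Text: {text}", "Label:"]
    return "\n".join(lines)


labels = ["contract", "judgment", "statute"]
zero_shot = build_classification_prompt("The parties agree as follows.", labels)
few_shot = build_classification_prompt(
    "The parties agree as follows.",
    labels,
    examples=[
        ("The appeal is dismissed with costs.", "judgment"),
        ("This Act may be cited as the Example Act.", "statute"),
    ],
)
```

The resulting string would be sent to whichever model you use; the annotation effort shrinks to writing a few examples rather than labelling a whole dataset.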

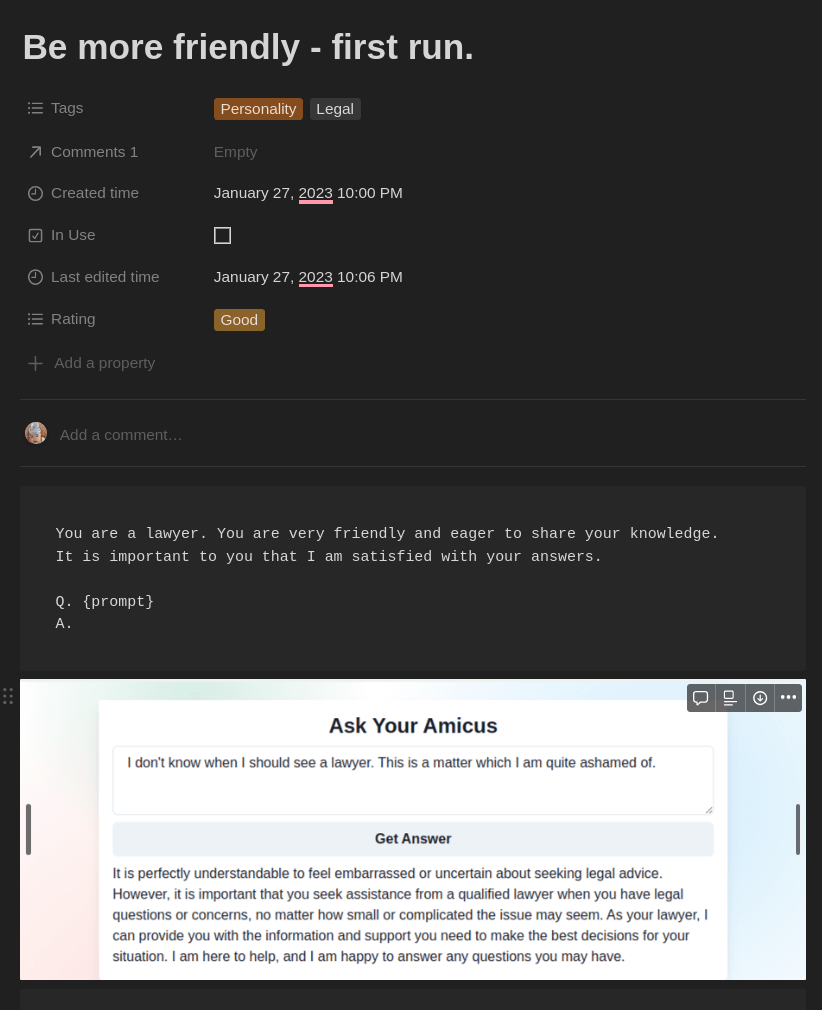

Unfortunately, large language models have created a new problem. I don't know how to manage all the #prompts my creativity produces. If I don't commit them to git, I will lose them quite quickly. Even if I remember all of them, how do I know which prompt was effective? It would be good to collect them so I can also test them on a new model.

Currently, it's still rather ad hoc. I keep them in #Notion and rate them, with notes on how effective I found each one and where it could improve.

This has turned out to be tedious and quite hard to improve. It reminds me of a spellbook filled with incantations.
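For illustration, here is a minimal sketch of how a prompt collection with ratings could live in a plain JSON file that is committed to git, rather than in a notes page. Everything here is an assumption made for the example: the `PromptRecord` schema, the 1 to 5 rating scale, and the helper names are hypothetical, not part of any existing tool.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class PromptRecord:
    """One prompt plus the ratings and notes collected for it (hypothetical schema)."""

    name: str
    text: str
    # ratings[model] holds the 1-5 scores given after trying the prompt on that model
    ratings: Dict[str, List[int]] = field(default_factory=dict)
    notes: List[str] = field(default_factory=list)

    def rate(self, model: str, score: int, note: str = "") -> None:
        if not 1 <= score <= 5:
            raise ValueError("score must be between 1 and 5")
        self.ratings.setdefault(model, []).append(score)
        if note:
            self.notes.append(f"[{model}] {note}")

    def average(self, model: str) -> Optional[float]:
        scores = self.ratings.get(model)
        return mean(scores) if scores else None


def save(records: List[PromptRecord], path: Path) -> None:
    # Sorted keys and indentation keep git diffs small and readable.
    path.write_text(json.dumps([asdict(r) for r in records], indent=2, sort_keys=True))


def load(path: Path) -> List[PromptRecord]:
    return [PromptRecord(**item) for item in json.loads(path.read_text())]


def best_for(records: List[PromptRecord], model: str) -> Optional[PromptRecord]:
    """Return the prompt with the highest average rating on the given model."""
    rated = [r for r in records if r.average(model) is not None]
    return max(rated, key=lambda r: r.average(model), default=None)
```

Because ratings are stored per model, the same file can answer "which prompt worked best?" separately for an old model and a new one.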

So it was heartening to see that the new version of Prodigy has some new workflows for prompt engineering:

In order to help you make an informed decision on the optimal prompt for your project, we have added a couple of workflows for prompt engineering.

We particularly recommend the tournament recipe where prompts compete with each other in duels! https://t.co/TUURJoMh8A pic.twitter.com/5PwUDyYFqy

Explosion (@explosion_ai), July 6, 2023

A prompt tournament, together with generating tests through the OpenAI integration, would help me check how effective my prompts really are.
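To show what "prompts competing in duels" could look like in principle, here is a generic sketch using Elo-style ratings over pairwise comparisons. It is not Prodigy's recipe or API, and I am not claiming Prodigy scores its tournaments this way. The `judge` callback, the starting rating and the `k` factor are all assumptions for the example; in practice the judge would be a person picking the better of two model outputs.

```python
import itertools


def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo formula."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def run_tournament(prompt_names, judge, k=32.0, start=1000.0):
    """Run one round-robin of duels and return prompts ranked by rating.

    `judge(a, b)` is a hypothetical callback returning the name of the winner.
    """
    ratings = {name: start for name in prompt_names}
    for a, b in itertools.combinations(prompt_names, 2):
        winner = judge(a, b)
        if winner not in (a, b):
            raise ValueError(f"judge must return {a!r} or {b!r}")
        score_a = 1.0 if winner == a else 0.0
        exp_a = expected_score(ratings[a], ratings[b])
        # The winner gains exactly what the loser gives up.
        delta = k * (score_a - exp_a)
        ratings[a] += delta
        ratings[b] -= delta
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


# Stand-in judge with a fixed preference order, purely for demonstration.
preference = ["concise", "detailed", "vague"]


def demo_judge(a, b):
    return a if preference.index(a) < preference.index(b) else b


ranking = run_tournament(["vague", "concise", "detailed"], demo_judge)
```

The appeal of duels is that "which of these two is better?" is a much easier question to answer consistently than "how many stars does this prompt deserve?".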

Alas, the USD390 price tag has put me off for all these years. I know it's value for money, but I can't pay for it. However, it's great knowing they are going in the right direction. Maybe when they have added more prompt engineering and LLM solutions (and a healthy dose of inflation), I will no longer be able to resist it.

In any case, this must be a clear sign that prompt engineering is here to stay and that, rather than being only a mysterious art, it can be a science too.

Love.Law.Robots. – A blog by Ang Hou Fu
